In this chapter, we test Llama 2 the easy way.

I am going to deploy a question-answering web service using FastAPI and Llama 2.

Set up the web server

```shell
git clone https://github.com/choonho/llama_server.git
cd llama_server
pip3 install llama-cpp-python langchain
pip3 install fastapi uvicorn
```
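Before starting the server, you can quickly confirm that the four packages are importable. Note that the pip package `llama-cpp-python` is imported as `llama_cpp`. The sketch below uses `importlib.util.find_spec`, which locates a module without executing it; `missing_packages` is just an illustrative helper name, not something from the repository.

```python
import importlib.util

# pip package name -> module name used in `import` statements.
REQUIRED = {
    "llama-cpp-python": "llama_cpp",
    "langchain": "langchain",
    "fastapi": "fastapi",
    "uvicorn": "uvicorn",
}


def missing_packages(required: dict = REQUIRED) -> list:
    """Return the pip names whose top-level module cannot be located."""
    return [
        pip_name
        for pip_name, module in required.items()
        if importlib.util.find_spec(module) is None
    ]
```

Running `print(missing_packages() or "all packages found")` inside the same environment you will use for `server.py` should print an empty result before you continue.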

Prepare the Llama 2 model file

In step 2, we created the Llama 2 model file. Copy it to "models/7B/ggml-model-q4_0.bin" inside the llama_server directory.
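A plain `cp` is enough here. If you prefer to script it, below is a small sketch that creates `models/7B` when it is missing and copies the file into place. `install_model` is a hypothetical helper, and the source path is whatever file you produced in step 2.

```python
import shutil
from pathlib import Path
from typing import Union

# Relative location the chapter asks for (inside the llama_server checkout).
MODEL_RELPATH = Path("models/7B/ggml-model-q4_0.bin")


def install_model(source: Union[str, Path], repo_root: Union[str, Path] = ".") -> Path:
    """Copy an existing model file to <repo_root>/models/7B/ggml-model-q4_0.bin.

    `install_model` is a hypothetical helper, not part of the repository.
    """
    src = Path(source)
    if not src.is_file():
        raise FileNotFoundError(f"model file not found: {src}")
    dest = Path(repo_root) / MODEL_RELPATH
    dest.parent.mkdir(parents=True, exist_ok=True)  # create models/7B if missing
    shutil.copy2(src, dest)  # copy the bytes plus file metadata
    return dest
```

Called from inside `llama_server`, `install_model("/path/to/your/ggml-model-q4_0.bin")` returns the destination path. The `/path/to/...` part is a placeholder.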

Run Server

```shell
python3 server.py
```
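The repository's `server.py` is not reproduced here. To give a rough idea of what such a server can look like, here is a hypothetical sketch that wires llama-cpp-python's `Llama` class into a FastAPI POST route. The `/ask` path, the `question` field, the Q/A prompt format and `max_tokens=256` are my assumptions, not the repository's. FastAPI, pydantic and `llama_cpp` are imported inside `create_app()`, so the prompt helpers can be tried without loading a model. Also, recent llama-cpp-python releases load GGUF files rather than ggml `.bin` files, so the model file may need to match your installed version.

```python
from typing import Callable

MODEL_PATH = "models/7B/ggml-model-q4_0.bin"


def build_prompt(question: str) -> str:
    """Wrap the user's question in a simple Q/A prompt (assumed format)."""
    return f"Q: {question.strip()}\nA:"


def answer(question: str, generate: Callable[[str], str]) -> dict:
    """Run the prompt through a text generator and return a JSON-ready dict."""
    text = generate(build_prompt(question))
    return {"question": question, "answer": text.strip()}


def create_app(model_path: str = MODEL_PATH):
    """Build the FastAPI app; heavy imports live here, not at module level."""
    from fastapi import FastAPI
    from llama_cpp import Llama
    from pydantic import BaseModel

    llm = Llama(model_path=model_path)  # loads the quantized model once

    def generate(prompt: str) -> str:
        # Stop before the model starts inventing the next "Q:" turn.
        out = llm(prompt, max_tokens=256, stop=["Q:"])
        return out["choices"][0]["text"]

    class Question(BaseModel):
        question: str  # hypothetical request-body field

    app = FastAPI()

    @app.post("/ask")  # hypothetical route
    def ask(body: Question) -> dict:
        return answer(body.question, generate)

    return app
```

If this were saved as `server_sketch.py`, you could start it with `uvicorn --factory server_sketch:create_app --port 8000`. The `--factory` flag tells uvicorn to call `create_app()` to get the app. Keeping `answer()` independent of the model makes the request logic easy to test with a fake generator.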

Test (Q&A)

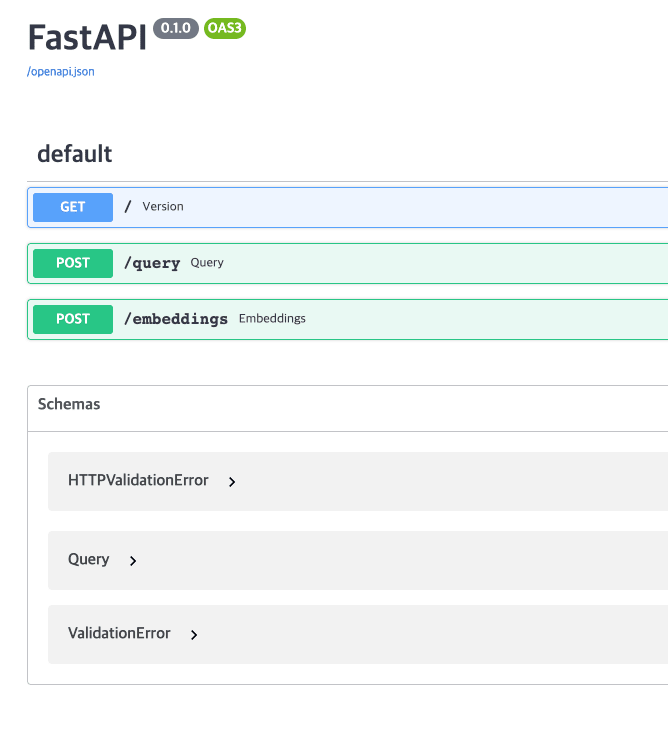

Open http://localhost:8000/docs in your web browser.

FastAPI automatically generates interactive API documentation at /docs, which is an easy way to test the API.

Expand the endpoint and click "Try it out".

Type your question in the "Request body" field, then click "Execute".
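The same request can be sent from a script. FastAPI publishes the schema behind the /docs page at `/openapi.json`, so the sketch below first lists the POST routes and then posts a question as JSON using only the standard library. The `{"question": ...}` body shape is an assumption. Check the "Request body" schema shown on /docs and adjust the field name if it differs. `post_routes` and `ask` are hypothetical helper names.

```python
import json
import urllib.request

BASE_URL = "http://localhost:8000"  # uvicorn's default port, as used above


def post_routes(base_url: str = BASE_URL) -> list:
    """List the POST paths FastAPI publishes in its OpenAPI schema."""
    url = base_url.rstrip("/") + "/openapi.json"
    with urllib.request.urlopen(url, timeout=10) as resp:
        schema = json.load(resp)
    return sorted(p for p, ops in schema.get("paths", {}).items() if "post" in ops)


def ask(question: str, path: str, base_url: str = BASE_URL, timeout: float = 300.0) -> dict:
    """POST {"question": ...} as JSON to `path` and decode the JSON reply."""
    body = json.dumps({"question": question}).encode("utf-8")
    req = urllib.request.Request(
        base_url.rstrip("/") + path,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    # A CPU/Metal generation can take a while, hence the generous timeout.
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.load(resp)
```

With the server running, `ask("What is Llama 2?", post_routes()[0])` should return the same JSON that the "Execute" button shows, provided the route accepts that body shape.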

Series

Llama 2 in Apple Silicon MacBook (1/3)

https://dev.to/choonho/llama-2-in-apple-silicon-macbook-13-54h

Llama 2 in Apple Silicon MacBook (2/3)

https://dev.to/choonho/llama-2-in-apple-silicon-macbook-23-2j51

Llama 2 in Apple Silicon MacBook (3/3)

https://dev.to/choonho/llama-2-in-apple-silicon-macbook-33-3hb7